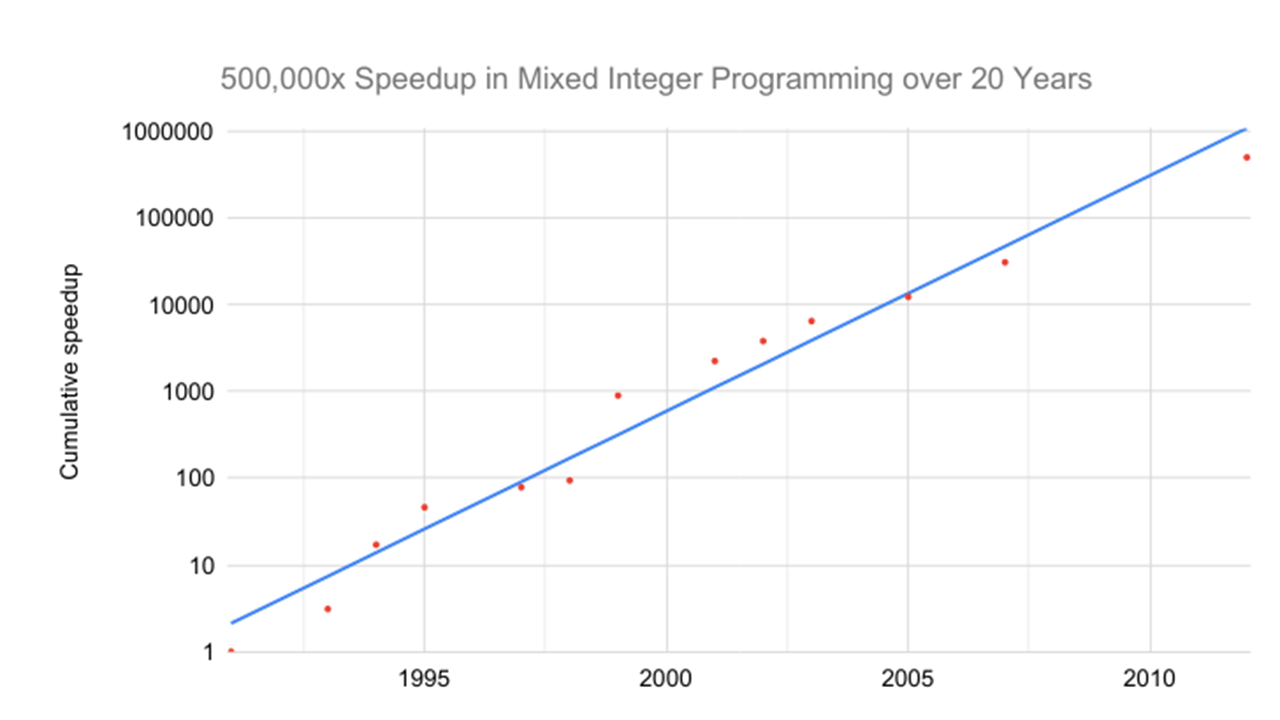

Artificial intelligence (AI) has made great strides in recent years, with algorithms improving year after year. According to work by OpenAI, the artificial intelligence research organization founded as a non-profit by Elon Musk, Sam Altman and others, the efficiency of AI algorithms is improving along an exponential curve comparable to the one Moore’s law describes for the number of transistors in an integrated circuit.

Gordon Moore co-founded Intel. In a seminal 1965 article, he predicted that the number of transistors in an integrated circuit would double every year, a prediction now known as Moore’s law. Fifty years later, even though many people believe Moore’s law is running out of steam, Intel’s processors deliver 3,500 times the performance of the company’s first microprocessor. So far, no other technology has improved at such a rate.
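To make the compounding concrete, here is a small arithmetic sketch of how a quantity grows when it doubles at a fixed interval. The function name `growth_factor` is our own, chosen for illustration. The second call uses the two-year doubling period Moore adopted when he revised his forecast in 1975.

```python
def growth_factor(years: float, doubling_period: float) -> float:
    """Multiplicative growth after `years` if a quantity doubles every `doubling_period` years."""
    return 2 ** (years / doubling_period)


# Moore's original 1965 rate: doubling every year, over a decade
print(growth_factor(10, 1))  # 1024.0

# His 1975 revision: doubling every two years, over the same decade
print(growth_factor(10, 2))  # 32.0
```

The gap between the two results shows how sensitive long-run projections are to the assumed doubling period.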

The very rapid evolution of processors has given rise to a by-product, artificial intelligence, which has gradually become a field in its own right. Algorithms have also progressed at a pace reminiscent of the success of integrated circuits. However, there is no overall measure of algorithmic progress. As OpenAI observes, progress is usually measured in terms of accuracy or a benchmark score. OpenAI therefore approached the subject from a particular angle: “algorithmic efficiency”, that is, the reduction in the computation needed to train a model to a given level of performance.
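Algorithmic efficiency in this sense holds performance fixed and asks how much less training compute a newer method needs to reach it. Below is a minimal sketch of that arithmetic, assuming the gain follows a steady exponential trend. The helper names `efficiency_gain` and `halving_time_months` and all compute figures are hypothetical, chosen only for illustration.

```python
import math


def efficiency_gain(old_compute: float, new_compute: float) -> float:
    """How many times less compute the newer method needs to reach the same performance."""
    return old_compute / new_compute


def halving_time_months(gain: float, elapsed_months: float) -> float:
    """Months per halving of required compute, assuming a steady exponential trend."""
    if gain <= 1:
        raise ValueError("gain must be greater than 1 to define a halving time")
    # A gain of 2**k over the period means k halvings happened in that time.
    return elapsed_months / math.log2(gain)


# Hypothetical example: same target accuracy, reached with 16x less compute 48 months later
gain = efficiency_gain(old_compute=1.6e18, new_compute=1e17)
print(gain)
print(halving_time_months(gain, elapsed_months=48))
```

Expressing the result as a halving time makes it directly comparable with a Moore’s-law-style doubling period.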

“Algorithmic improvement is a key factor driving the advances in AI. It’s important to search for measures that shed light on overall algorithmic progress, even though it’s harder than measuring such trends in compute,” OpenAI wrote in a report.

OpenAI studied recent AI successes and recommended a few steps that could affect its progress. First, however, it identified the factors that make it difficult to design a measure capable of tracking the overall progress of artificial intelligence.

Here are the different points that OpenAI raised in its report:

- It is impractical to apply the classical complexity analysis used for exact algorithms to deep learning, because deep learning seeks approximate solutions;

- Performance is often measured in different units (accuracy, BLEU, cross-entropy loss, etc.), and gains on many of these metrics are hard to interpret;

- Each problem is unique and their difficulties cannot be compared quantitatively, so assessing progress requires an intuition for each problem;

- Most research focuses on reporting overall performance improvements rather than efficiency improvements, so additional work is needed to separate the gains due to algorithmic efficiency from those due to additional computation;

- The rate at which new benchmarks are being solved compounds the problem.
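One way to separate efficiency gains from gains due to extra computation, in the spirit of the points above, is to fix a target score on a single benchmark and compare how much compute each model needed to reach it, rather than comparing final scores. The sketch below assumes each training run is logged as (cumulative compute, accuracy) checkpoints. The curves and the `compute_to_target` helper are hypothetical, invented for illustration. A real analysis would need finer-grained curves or interpolation between checkpoints.

```python
from typing import Optional, Sequence, Tuple

# (cumulative training compute, accuracy) checkpoints, in chronological order
Curve = Sequence[Tuple[float, float]]


def compute_to_target(curve: Curve, target: float) -> Optional[float]:
    """Return the compute at the first checkpoint whose accuracy reaches `target`, or None."""
    for compute, accuracy in curve:
        if accuracy >= target:
            return compute
    return None


# Hypothetical training curves for an older and a newer model on the same benchmark
old_model = [(1e15, 0.40), (1e16, 0.55), (1e17, 0.63), (1e18, 0.70)]
new_model = [(1e15, 0.52), (1e16, 0.64), (1e17, 0.72), (1e18, 0.78)]

target = 0.63  # fixed performance level, e.g. the older model's reference score
old_c = compute_to_target(old_model, target)
new_c = compute_to_target(new_model, target)
if old_c is not None and new_c is not None:
    print(f"efficiency gain at {target:.0%} accuracy: {old_c / new_c:.0f}x")
```

Because both runs are scored with the same metric on the same benchmark, the ratio sidesteps the problem of comparing gains across different units.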

Source: Study report (PDF)